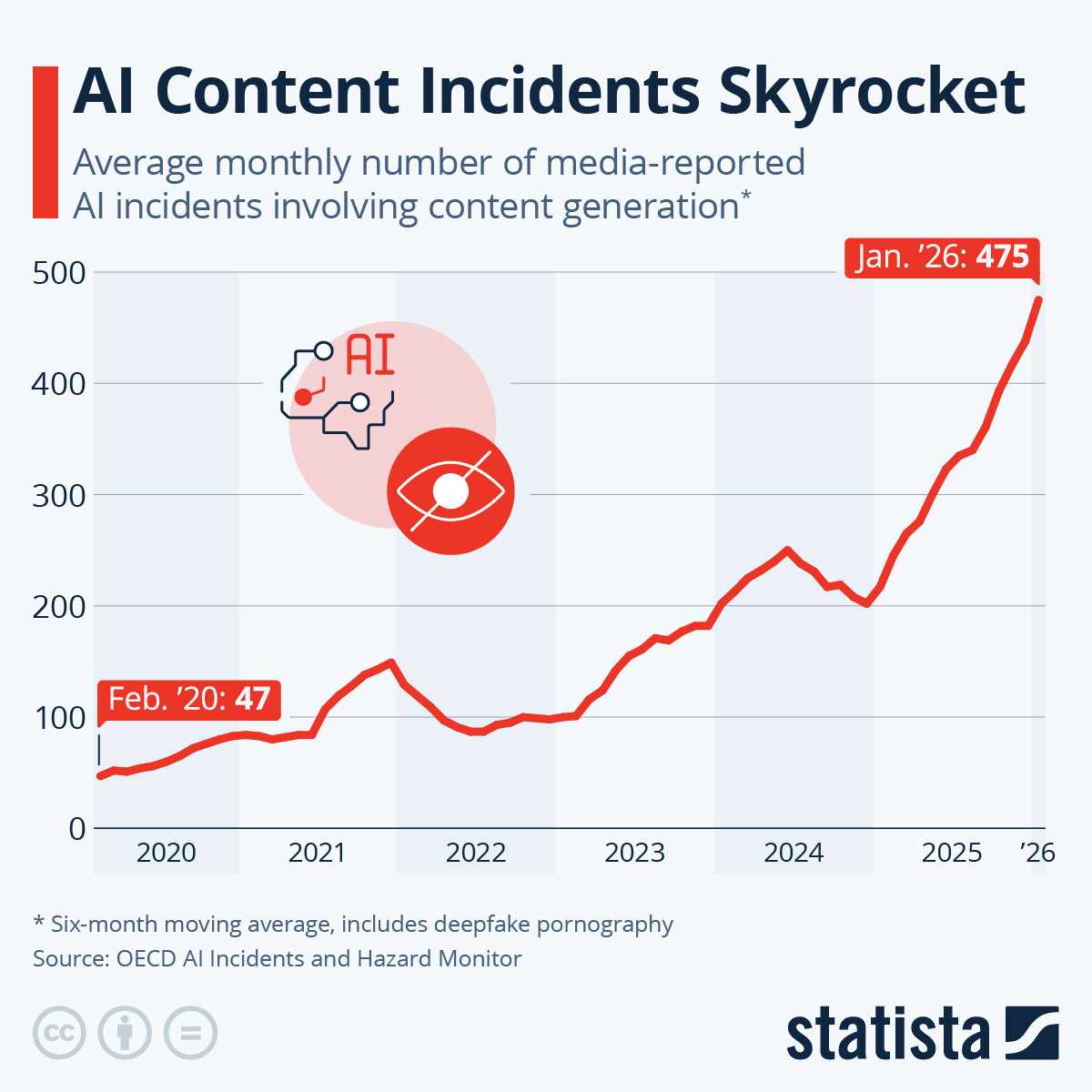

The latest data from the OECD’s AI Incidents and Hazards Monitor reveals a staggering boom in monthly media-reported AI-related content incidents: from about 50 in early 2020 to over 200 in early 2024 and nearly 500 by January 2026, a roughly tenfold increase over the period. As our infographic shows, the rise has been particularly strong over the last twelve months, during which the monthly count doubled. This steep rise underscores the rapid proliferation of AI-generated content worldwide, from synthetic media to deepfakes, flooding platforms like TikTok, X, Instagram and YouTube.

Teens are on the front line: A 2025 Pew Research Center survey found that two-thirds of U.S. teens now use AI chatbots, with nearly 30 percent engaging with them daily. More concerning, a 2026 Education Week report revealed that 1 in 17 teens (aged 13 to 17) have already been targeted by deepfake content, such as non-consensual synthetic imagery, and over 80 percent of surveyed teens acknowledged the harm caused by such manipulations. Meanwhile, adults struggle to keep up. Research shows that humans can sometimes detect AI-generated voices or videos, but accuracy rates vary widely, from around 60 percent to 90 percent, according to a study published on PMC in Jan. 2026. Many therefore remain vulnerable to believing synthetic content is real, raising urgent concerns about the spread of misinformation, especially as AI tools become more sophisticated and accessible.