Using a search engine nowadays will inevitably surface AI-generated results towards the top of the page. This means that it is not only the many people going straight to ChatGPT, Perplexity or Gemini with their queries who see the conclusions of large language models (LLMs) in their online searches, but everybody else as well. While most people are aware that LLM results can be wrong, tedious double-checking might be skipped when one is in a hurry, or when the results are just what one was looking for and enthusiasm prevails.

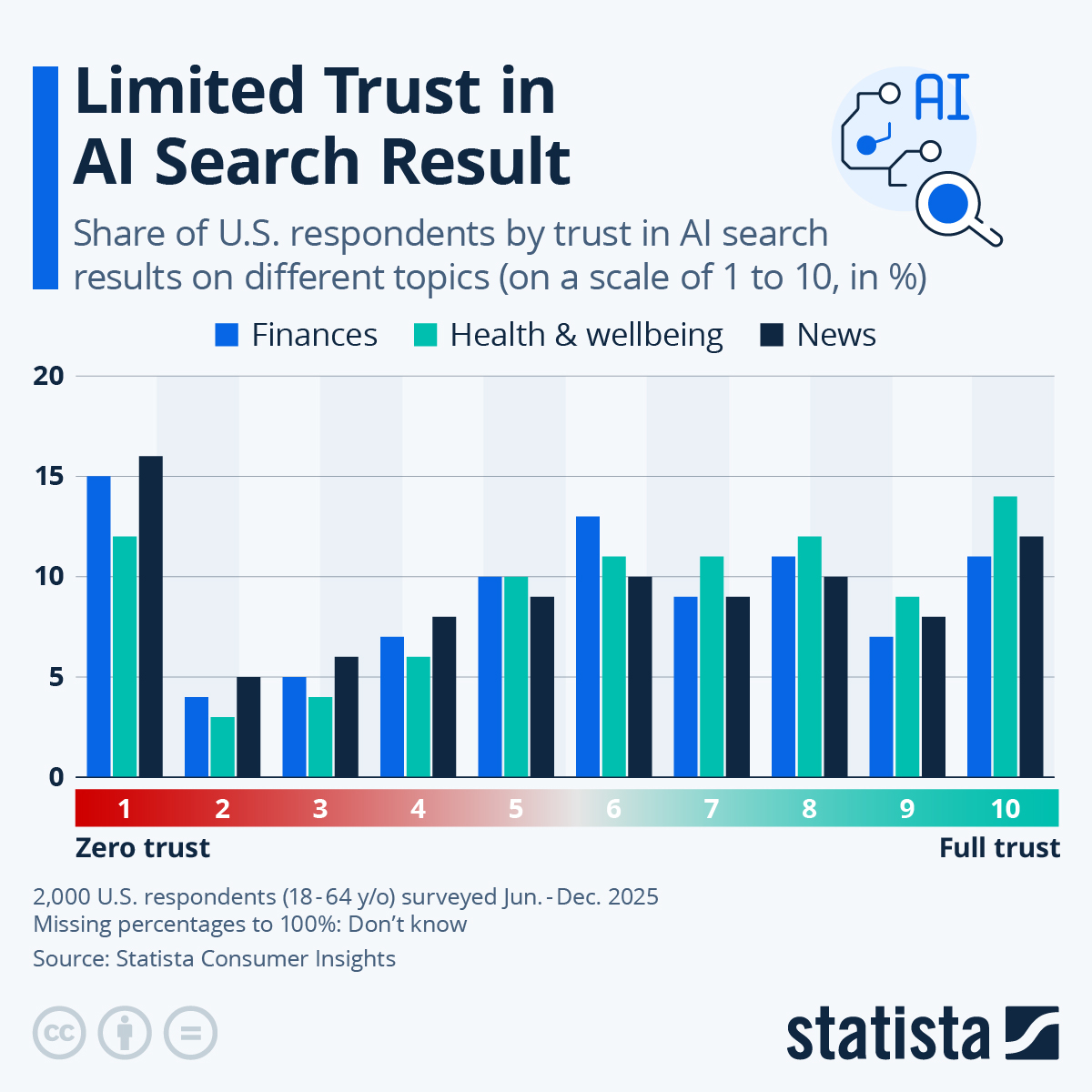

But how trusting is the average American of AI search results, and does trust vary by search category? Results from a Statista Consumer Insights survey show that only around one third of Americans have a high level of trust in these search results (giving eight to 10 points on a 10-point scale), while around a quarter gave only one to three points, with one meaning no trust at all. This leaves close to 40 percent of Americans in the middle, trusting AI results somewhat.

Depending on the topic, there was a slight variation in trust, with Americans trusting AI a little more on health and wellness topics (35 percent showing a high level of trust and only 19 percent showing a low level of trust). Finance and news topics elicited a little less trust, with only around 29 percent of respondents picking the upper end of the scale for finance and a comparatively high 27 percent saying they had little trust when it came to news coverage.