The U.S. Department of War has launched a new AI acceleration strategy, aimed at securing military AI dominance. The new strategy will “unleash experimentation, eliminate legacy bureaucratic blockers and integrate the bleeding edge of frontier AI capabilities across every mission area”, according to the Department.

The strategy comes amid mounting concern over the risks of using AI in warfare. Scientists at the Bulletin of the Atomic Scientists warn that the use of AI in weapons of war, including its possible future application to nuclear weapons, is highly concerning. The U.S. has already asked contractors to integrate AI into non-nuclear command and control systems, while Russia has reportedly explored similar applications for nuclear command and control. According to the Bulletin, AI has also reportedly been used in targeting systems in Ukraine, and Israel has reportedly used an AI-based system to create target lists in Gaza.

Public opinion on the topic remains divided in the United States. A recent Gallup survey found that 41 percent of Americans believe AI will worsen national security risks, while 37 percent think it will improve them. Views are similarly split on developing AI-enabled autonomous weapons: 48 percent oppose their development, while 39 percent are in favor. However, support rises to 53 percent when respondents are asked how they would feel if other countries developed such weapons first.

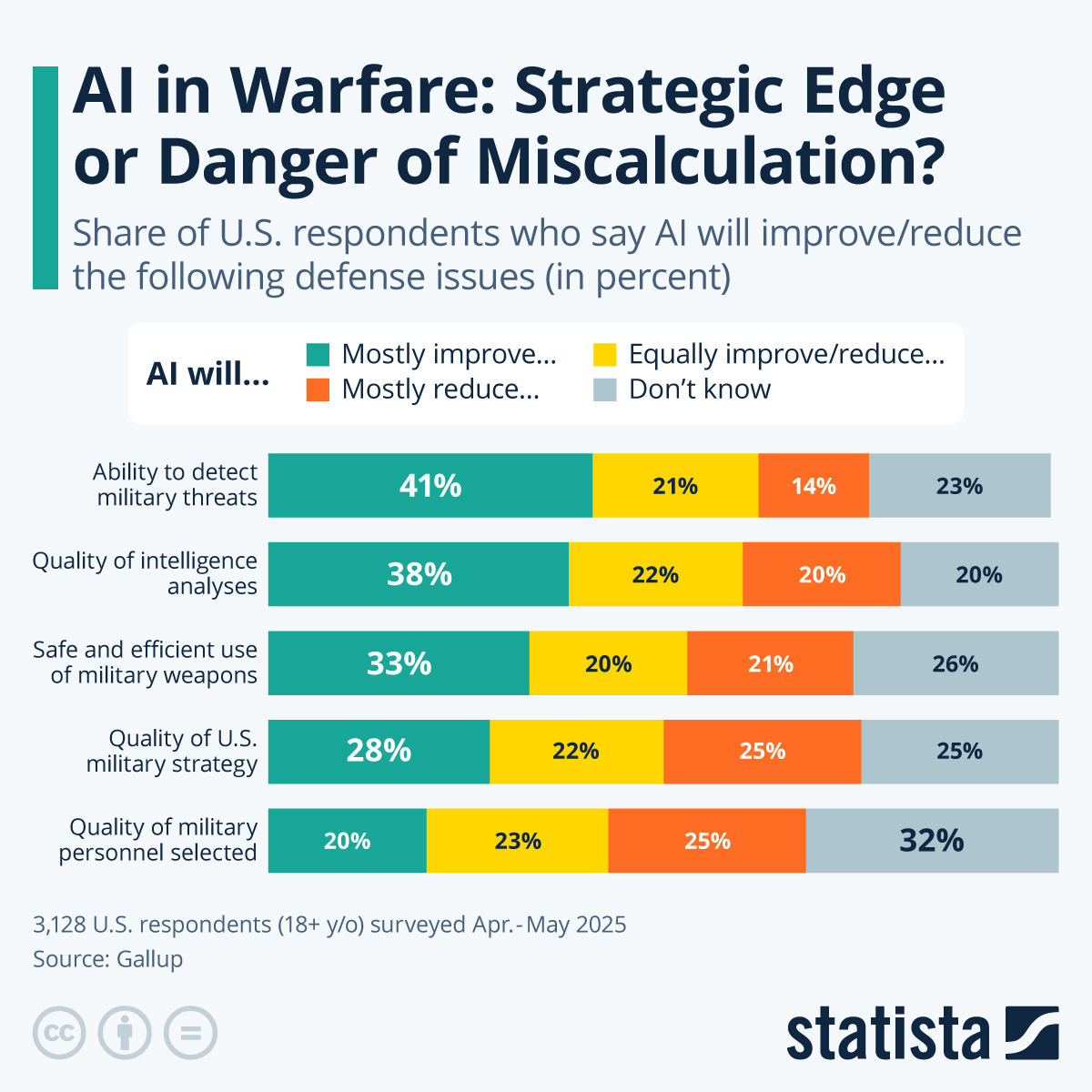

Views also varied on specific use cases of AI in military operations, with widespread uncertainty across all fields. U.S. adults were most optimistic about AI improving threat detection (41 percent) and intelligence analysis (38 percent), while fewer said they believed it would improve the safe use of weapons (33 percent), military strategy (28 percent) or personnel selection (20 percent).